- Using much larger data sets, to increase the accuracy.

- Making sure all the tests are consistent, and that the playing field is level.

- Making the benchmarks repeatable, not relying on invariants that GHC does not promise.

- Understanding the precise results: I want to know why a particular benchmark gives the results it does.

- Improving the performance, where increased understanding enables this.

1) I've increased the default data set to 45 MB, which should give more accurate results. I've also benchmarked on a variety of computers, and found that the relative speed of processor and disk makes a massive difference. All the results given here are from my work machine. On my home machine Supero is up to 4 times faster than GHC!

2) It turned out that using -fvia-C meant that the gcc back end to GHC was inlining some primitive functions. I've now moved to using -fasm with GHC, which results in no inlining. I've also set appropriate definitions in the C to turn off inlining of the corresponding C functions. With all these steps, none of the implementations inlines anything inside getchar() or isspace(). Since all programs make exactly the same number of calls to each function, this should not benefit or penalise any language, but it is more consistent.

3) I've moved from using my fake version of the IO Monad to using GHC's IO Monad. This change means that GHC no longer attempts to optimise my computations into constants, and that future versions of GHC are guaranteed to behave in much the same way. It should also have the side effect of making the code faster (it doesn't, as discussed next, but it should!).

4) With more detailed and repeatable benchmarks, I've started to look for the precise reasons why a particular benchmark performs as it does. In doing so I've noticed that GHC can place heap checks in the wrong place, sometimes fails to infer the correct strictness information and has too many stack checks. I have reported each of these issues. The benchmarks are performed with these problems present in the Supero results. As far as I am able to tell, if these three issues were solved, Supero would always obtain the same speed as C (with the same assembly code), or outperform C (in one case).

5) I discovered an issue with the words function, which I have brought to the attention of the Haskell library maintainers. The words function as it currently stands in the libraries performs two redundant isSpace tests per word detected. The fix is simple, and has been applied to the libraries Supero uses. Note that the GHC result has not had this fix applied, so it could be expected to improve.
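To illustrate point 2, here is a minimal sketch of one way to keep getchar() and isspace() as genuine function calls in C. This is an assumption about the kind of definition involved, not the exact one used for these benchmarks. The C standard allows library functions to also be provided as macros, and removing the macro with `#undef` leaves only the real function. The helper names `read_char_call` and `is_space_call` are hypothetical.

```c
#include <ctype.h>
#include <stdio.h>

/* Library functions may also be defined as macros. Removing any macro
   definitions means the calls below go to the real functions. */
#undef getchar
#undef isspace

/* Hypothetical helper: read one character through a real function call. */
int read_char_call(void) {
    return getchar();
}

/* Hypothetical helper: classify a character through a real function call.
   Returns 1 for whitespace and 0 otherwise. */
int is_space_call(int c) {
    return isspace(c) != 0;
}
```

A compiler may still treat some library functions as built-ins, so a flag such as gcc's `-fno-builtin` may be needed as well. It is worth checking the generated assembly to confirm that the calls really are present.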

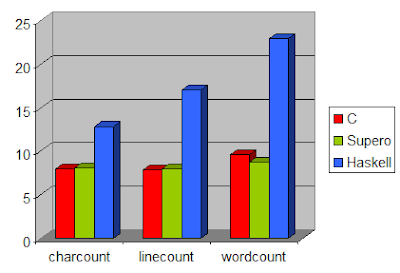

With all these modifications, it only remains to post updated benchmarks:

The benchmarks follow an expected pattern for character counting and line counting. The C version is minimally faster than the Supero version, 1.5% faster in both cases. The Haskell version lags substantially further behind.

The one benchmark where this is not the case is word count. In this benchmark Supero is 10% faster than C! This even includes the overheads of missed strictness, excessive heap checks and excessive stack checks. Obviously this is a surprising result, so deserves more explanation. The C code which performs word counting is:

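    #include <ctype.h>
    #include <stdio.h>

    int main() {
        int i = 0;                          /* number of words seen so far */
        int c, last_space = 1, this_space;
        while ((c = getchar()) != EOF) {
            this_space = isspace(c);
            if (last_space && !this_space)  /* space to non-space: new word */
                i++;
            last_space = this_space;
        }
        printf("%i\n", i);
        return 0;
    }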

There are essentially two states - traversing through a sequence of spaces, or traversing through a sequence of non-spaces. Depending on which state you are in, and where you are transitioning to, you may need to increment a counter. The C code maintains this state information in last_space.

However, this is not the fastest method. If that one bit of information were encoded in the program counter, i.e. by having different code paths for being in a sequence of spaces and being in a sequence of non-spaces, the code could be optimised further: the last_space variable becomes redundant, and the increment test can be eliminated in one branch.

To implement two tight inner loops in C, where control can bounce between the loops, is not trivial. One approach is to use goto, but this often disables some optimisations. The other approach is to have nested while loops, with a return to exit the inner loop. Either way is unpleasant, and unnatural.
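As a rough sketch of the goto approach (my own illustration, not code from the benchmarks), the two states can be written as two loops that jump to each other. To keep the example easy to check, it assumes the input is a NUL-terminated string rather than stdin, and the function name `count_words_two_loops` is hypothetical.

```c
#include <ctype.h>
#include <stddef.h>

/* Counts words in a NUL-terminated string. The current state (inside a
   run of spaces, or inside a word) is encoded by which loop is running,
   so no last_space variable is needed. */
size_t count_words_two_loops(const char *s) {
    size_t count = 0;

in_spaces:
    for (; *s != '\0'; s++) {
        if (!isspace((unsigned char)*s)) {
            count++;        /* space to non-space: a new word starts */
            s++;
            goto in_word;
        }
    }
    return count;

in_word:
    for (; *s != '\0'; s++) {
        if (isspace((unsigned char)*s)) {
            s++;            /* word ended: no counter test on this path */
            goto in_spaces;
        }
    }
    return count;
}
```

Only the in_spaces loop ever touches the counter, and it does so unconditionally when it leaves the loop. The in_word loop performs a single isspace test per character and nothing else.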

Contrast this low-level hackery with the Haskell version:

    main = print . length . words =<< getContents

The code is specified in a high-level manner. Running this code through Supero automatically produces the necessary pair of tight loops, with transitions between them expressed as tail calls. GHC then compiles Supero's output into directly looping code, which is able to outperform the C equivalent.

My next step is to take the Supero program, and try it on a larger range of benchmarks. I hope to tackle the nobench suite, competing against other Haskell compilers, rather than against C.