I've been working on optimisation techniques for the last week, as one chapter of my PhD is likely to be on that topic. The approach I'm using is to take Yhc.Core, optimise it at the Core level, then spit out GHC Haskell which can then be compiled. The optimisation technique I've been working on can best be described as "deforestation on steroids" - it's entirely automatic (no notion of special named functions, as in foldr/build etc.) and isn't too expensive.

The particular benchmark I've been working on is "print . length =<< getContents" or "wc -c". In order to make it a fair comparison, I require that all implementations use the C getchar function to read their input.

Writing this benchmark in C is quite easy:

    #include <stdio.h>

    int main(void)
    {
        int i = 0;
        while (getchar() != EOF)
            i++;
        printf("%i\n", i);
        return 0;
    }

Writing in Haskell, forcing the use of getchar, is a little bit more tricky:

    {-# LANGUAGE ForeignFunctionInterface #-}

    import Foreign.C.Types
    import System.IO
    import System.IO.Unsafe

    foreign import ccall unsafe "stdio.h getchar" getchar :: IO CInt

    main :: IO ()
    main = print . length =<< getContents2

    -- A lazy getContents built on getchar; (-1) is taken to be EOF.
    getContents2 :: IO String
    getContents2 = do
        c <- getchar
        if c == (-1) then return [] else do
            -- Defer reading the rest of the input until it is demanded.
            cs <- unsafeInterleaveIO getContents2
            return (toEnum (fromIntegral c) : cs)

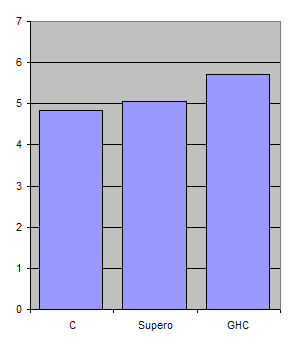

I then benchmarked the C at -O3 against the Haskell going through my optimiser, and going through GHC at -O2. The benchmark counted the characters in a 35 MB file, run repeatedly, as usual.

The actual numbers are C=4.830, Supero=5.064, GHC=5.705. This makes Supero 5% slower than C, and GHC 18% slower than C. The reason that Supero is faster is that it has managed to deforest the length/getContents pair. The inner loop in Supero allocates no data at all, whereas the GHC one does. Supero allocates 11,092 bytes in the heap, GHC allocates 1,104,515,888.

This benchmark is not a particularly good one - the task is simple, the computation is minimal and most of the time is spent inside the getchar function. It is however a good place to start, and shows that Haskell can get very close to C speeds - even using standard type String = [Char] processing. Supero has made global optimisations that GHC was unable to make, and GHC does not have any techniques with which it could achieve the same results. GHC has then taken the Supero output and automatically identified strictness, performed unboxing/inlining, and done lots of other useful low-level optimisations.
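To give a feel for what deforesting the length/getContents pair means, here is a hand-written sketch of a loop that counts characters without ever building the intermediate String. It is not Supero's actual output; the names countWith and go are made up for illustration, and (-1) is assumed to be EOF, as in the program above.

```haskell
{-# LANGUAGE ForeignFunctionInterface, BangPatterns #-}

import Foreign.C.Types (CInt)

foreign import ccall unsafe "stdio.h getchar" getchar :: IO CInt

-- Hypothetical helper: count the values produced by a reader action
-- until it returns (-1), which is assumed to signal EOF.
-- No list cells are built; the only state is a strict Int counter.
countWith :: IO CInt -> IO Int
countWith next = go 0
  where
    go !n = do
        c <- next
        if c == (-1) then return n else go (n + 1)

main :: IO ()
main = countWith getchar >>= print
```

Taking the reader as a parameter is only there so the loop can be tried without stdin; passing getchar gives the same observable result as the length/getContents2 version.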

I'm going to move on to "wc -l" next, and hopefully onto the nobench suite. The techniques used in Supero are all general, and I hope that as the complexity of the problem increases, the payoff will do so too.
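For reference, a minimal sketch of how the "wc -l" benchmark could be specified in the same style. This is an assumption about the formulation rather than the program that will actually be benchmarked, and countLines is a made-up name.

```haskell
-- Hypothetical specification of a line-counting benchmark.
countLines :: String -> Int
countLines = length . lines

main :: IO ()
main = print . countLines =<< getContents
```

Note that this differs from wc -l when the last line has no trailing newline: wc -l counts newline characters, whereas lines also counts the final unterminated line.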

Wow. That's some exciting stuff.

I'm really really eager to see the 'wc -l' results.

> The particular benchmark I've been working on is "print . length =<< getContents" or "wc -l".

"wc -l" should probably be "wc -m" or "wc -c".

Any details on your deforestation technique?

Anon: I did initial results last night, I suspect the results will be GHC takes around 4 times as long as C, mine is the same speed - i.e. mine is 4 times faster.

stefanha: Blogger ate that sentence several times, it was right the first time! No details yet, but once the algorithms etc have settled I'll explain them properly.