Summary: I've written a continuous integration system, in Haskell, designed for large projects. It works, but probably won't scale yet.

I've just released bake, a continuous integration system and an alternative to Jenkins, Travis, Buildbot etc. Bake eliminates the problem of "broken builds": a patch is never merged into the repo until it has passed all the tests.

Bake is designed for large, productive, semi-trusted teams:

- Large teams where there are at least several contributors working full-time on a single code base.

- Productive teams which are regularly pushing code, many times a day.

- Semi-trusted teams where code does not go through manual code review, but code does need to pass a test suite and perhaps some static analysis. People are assumed to be fallible.

Current state: At the moment I have a rudimentary test suite, and it seems to mostly work, but Bake has never been deployed for real. Some optional functionality doesn't work, some of the web UI is a bit crude, the algorithms probably don't scale and all console output from all tests is kept in memory forever. I consider the design and API to be complete, and the scaling issues to be easily fixable - but it's easier to fix after it becomes clear where the bottleneck is. If you are interested, take a look, and then email me.

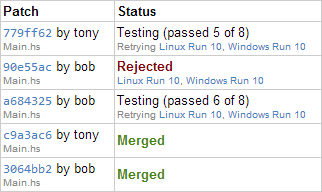

To give a flavour, the web GUI looks of a running Bake system looks like:

The Design

Bake is a Haskell library that can be used to put together a continuous integration server. To run Bake you start a single server for your project, which coordinates tasks, provides an HTTP API for submitting new patches, and a web-based GUI for viewing the progress of your patches. You also run some Bake clients which run the tests on behalf of the server. While Bake is written in Haskell, most of the tests are expected to just call some system command.

There are a few aspects that make Bake unique:

- Patches are submitted to Bake, but are not applied to the main repo until they have passed all their tests. There is no way for someone to "break the build" - at all points the repo will build on all platforms and all tests will pass.

- Bake scales up so that even if you have 5 hours of testing and 50 commits a day it will not require 250 hours of computation per day. In order for Bake to prove that a set of patches pass a test, it does not have to test each patch individually.

- Bake allows multiple clients to run tests, even if some tests can only be run on some clients, allowing both parallelisation and specialisation (testing both Windows and Linux, for example).

- Bake can detect that tests are no longer valid, for example because they access a server that is no longer running, and report the issue without blaming the submitted patches.
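The batching point above can be illustrated with a small sketch. This is our own illustration, not Bake's actual algorithm: it assumes a hypothetical oracle `passes` that says whether the repo plus a given sequence of patches passes the whole test suite, tests the entire queue in one go, and only bisects the queue when that run fails. The names `integrate` and `passes` are hypothetical.

```haskell
-- A sketch of batched integration with bisection on failure.
-- Hypothetical illustration only; this is not Bake's real algorithm.

-- | @integrate passes base queue@ returns (accepted, rejected, test runs).
-- 'passes' is an assumed oracle: does this sequence of patches, applied to
-- the repo, pass every test? Invariant: if the accepted list is non-empty,
-- then base ++ accepted was itself tested and passed.
integrate :: ([p] -> Bool) -> [p] -> [p] -> ([p], [p], Int)
integrate _ _ [] = ([], [], 0)
integrate passes base queue
  | passes (base ++ queue) = (queue, [], 1)  -- whole batch passes: one run
  | [p] <- queue = ([], [p], 1)              -- a lone failing patch is rejected
  | otherwise =
      -- Split the batch, integrate the first half, then integrate the
      -- second half on top of whatever the first half accepted.
      let (l, r) = splitAt (length queue `div` 2) queue
          (okL, badL, nL) = integrate passes base l
          (okR, badR, nR) = integrate passes (base ++ okL) r
      in (okL ++ okR, badL ++ badR, 1 + nL + nR)

main :: IO ()
main = do
  -- Eight good patches: one test run instead of eight.
  print (integrate (const True) [] [1 .. 8 :: Int])
  -- Patch 5 breaks the build: it is rejected and the rest are merged.
  print (integrate (notElem 5) [] [1 .. 8 :: Int])
```

Running it prints `([1,2,3,4,5,6,7,8],[],1)` and then `([1,2,3,4,6,7,8],[5],7)`: with no failures the whole queue costs a single run, and a bad patch only costs extra runs along the path that isolates it. The sketch assumes test results are deterministic; flaky tests would need more care.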

An Example

The test suite provides both an example configuration and commands to drive it. Here we annotate a slightly simplified version of the example; for the lists of imports, see the original code.

First we define an enumeration for where we want tests to run. Our server is going to require tests on both Windows and Linux before a patch is accepted.

```haskell
-- Eq is needed because matchOS compares platforms.
data Platform = Linux | Windows deriving (Show,Read,Eq)

platforms :: [Platform]
platforms = [Linux,Windows]
```

Next we define the test type. A test is something that must pass before a patch is accepted.

```haskell
data Action = Compile | Run Int deriving (Show,Read)
```

Our type is named Action. We have two distinct kinds of test: compiling the code, and running the result with a particular argument. Now we need to supply some information about the tests:

```haskell
-- Every platform gets a Compile test plus three Run tests.
allTests :: [(Platform,Action)]
allTests = [(p,t) | p <- platforms, t <- Compile : map Run [1,10,0]]

execute :: (Platform,Action) -> TestInfo (Platform,Action)
execute (p,Compile) = matchOS p $ run $ do
    cmd "ghc --make Main.hs"
execute (p,Run i) = require [(p,Compile)] $ matchOS p $ run $ do
    cmd ("." </> "Main") (show i)
```

We have to declare allTests, the list of all tests that must pass, and execute, which gives information about a test. Note that the test type is (Platform,Action), so a test is a platform (where to run the test) and an Action (what to run). The run function gives an IO action to run, and require specifies dependencies: here each Run test requires the Compile test for the same platform. We use an auxiliary matchOS to detect whether a test is running on the right platform:

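```haskell
-- Needs the CPP extension; WINDOWS is assumed to be defined when compiling on Windows.
myPlatform :: Platform
#if WINDOWS
myPlatform = Windows
#else
myPlatform = Linux
#endif

-- A test for platform p is only suitable on a client running platform p.
matchOS :: Platform -> TestInfo t -> TestInfo t
matchOS p = suitable (return (p == myPlatform))
```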

We use the suitable function to declare whether a test can run on a particular client. Finally, we define the main function:

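```haskell
main :: IO ()
main = bake $
    ovenGit "http://example.com/myrepo.git" "master" $  -- Git repo URL and branch
    ovenTest readShowStringy (return allTests) execute   -- what the tests are, and how to run them
    defaultOven{ovenServer=("127.0.0.1",5000)}           -- where the server listens
```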

We define main = bake, then fill in some configuration. We first declare we are working with Git, giving a repo URL and a branch name. Next we declare what the tests are, passing the information about the tests. Finally we give a host/port for the server, which we can visit in a web browser or access via the HTTP API.
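To make the roles of run, require and suitable more concrete, the sketch below models them with a toy record and asks which tests a client could start next. It is our own simplification, not Bake's implementation: `ToyInfo`, `toyExecute` and `runnableNow` are hypothetical, the client is assumed to be a Linux machine, and the actions just print instead of calling ghc.

```haskell
import Control.Monad (filterM)

data Platform = Linux | Windows deriving (Show, Read, Eq)
data Action = Compile | Run Int deriving (Show, Read, Eq)

-- Hypothetical stand-in for Bake's TestInfo; NOT the real (abstract) type.
data ToyInfo t = ToyInfo
  { toyRun      :: IO ()    -- the action that 'run' supplies
  , toyRequire  :: [t]      -- dependencies added by 'require'
  , toySuitable :: IO Bool  -- the client check added by 'suitable'
  }

-- Assumption: this client is a Linux machine.
myPlatform :: Platform
myPlatform = Linux

platforms :: [Platform]
platforms = [Linux, Windows]

allTests :: [(Platform, Action)]
allTests = [(p, t) | p <- platforms, t <- Compile : map Run [1, 10, 0]]

-- Same shape as the example's execute, with placeholder actions.
toyExecute :: (Platform, Action) -> ToyInfo (Platform, Action)
toyExecute (p, Compile) = ToyInfo (putStrLn "compile") [] (return (p == myPlatform))
toyExecute (p, Run i) =
  ToyInfo (putStrLn ("run " ++ show i)) [(p, Compile)] (return (p == myPlatform))

-- Tests this client could start now: not yet done, every dependency done,
-- and suitable for this client.
runnableNow :: Eq t => (t -> ToyInfo t) -> [t] -> [t] -> IO [t]
runnableNow info tests done = filterM ok [t | t <- tests, t `notElem` done]
  where
    ok t
      | all (`elem` done) (toyRequire (info t)) = toySuitable (info t)
      | otherwise = return False

main :: IO ()
main = do
  first <- runnableNow toyExecute allTests []
  print first
  mapM_ (toyRun . toyExecute) first
  next <- runnableNow toyExecute allTests first
  print next
```

On this assumed Linux client, the first call offers only (Linux,Compile); once that is marked done, the three Linux Run tests become available, while the Windows tests are never offered because they are not suitable here.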

Using the Example

Now that we have defined the example, we need to start a server and some clients using the command line for our tool. Assuming we compiled the program as bake, we can run bake server and bake client (we'll need to launch at least one client per OS). We can then view the state by visiting http://127.0.0.1:5000 in a web browser.

To add a patch we can run bake addpatch --name=cb3c2a71, passing the SHA1 of the commit. Bake will then try to integrate that patch into the master branch once all the tests have passed.

Comments

Bake is also the name for a Vala build system: https://launchpad.net/bake

> Bake scales up so that even if you have 5 hours of testing and 50 commits a day it will not require 250 hours of computation per day. In order for Bake to prove that a set of patches pass a test, it does not have to test each patch individually.

Doesn't that hurt bisectability if untested patches reach the repository?

Luca: I didn't know that. I suspect the name Bake has been used for several build systems over time (anything *ake seems to be quite popular), so I'll make sure to always write "Bake continuous integration", which hopefully won't confuse people. I might also add a link to make it quite clear the projects are unrelated.

nometa: Yes, it hurts bisectability a bit. But most people already only run CI on a push, not on each commit, so there are plenty of untested commits. Even with my small projects, testing each commit would be infeasible. So it removes a few known-good points, but hopefully it's not significantly worse in practice.

> Patches are submitted to Bake, but are not applied to the main repo until they have passed all their tests. There is no way for someone to "break the build" - at all points the repo will build on all platforms and all tests will pass.

This is how the CI from koalitycode.com works. However, they just got bought by Docker and will shut down, so your claim to uniqueness may end up holding.

Travis CI integration with pull requests on GitHub ends up providing a similar result. Which style is better depends on your particular use case.

Greg: I should probably tone down the word unique. I'll do that in the README, which I basically pasted here.

Travis CI + GitHub provides a similar result for untrusted teams, where the contributors have their code reviewed. Doing it with your own patches would be too much work. You want to automate the pressing of merge when it has passed, which Bake does.

Hi Neil,

Really interested in seeing and using this!

I like the idea of committing after the build is proven to pass. This is what I achieved with gerrit + Jenkins, with the additional benefit of a code review gate, something I found of great value for teams distributed in space or time, for which pairing is hard. The nice thing with gerrit is that each commit triggers a build, so bisectability is not hurt. My project was small enough (and I strived hard to keep it that way) that build time was not an issue.

But then you can combine gerrit + zuul (that's what mediawiki and openstack do) to (1) parallelise builds and (2) stop builds early when they contain a failed commit.

I would not have been so categorical a few years ago, but now I think having each commit built and passed before merging is an absolute must (e.g. bisectability is not optional, IMHO), having wasted hours tracking down bugs in stacks of commits.

Arnaud

Arnaud: I've been looking at it, and it does seem I could do an incremental build on each commit, but not run the tests on each commit. That gives you most of the bisectability, but saves the time of running the tests (which can be hours).

I totally agree that code must pass before merging, in my case because in a multi-developer team you need the person who wrote the bad code to have the problem of fixing it, not the other people who need to get their code included.

IMHO, this should be left to the discretion of the user. The definition of what qualifies as a valid build is highly dependent on your particular context. But this might already be the case :-)

Of course! The entire system is parameterised over state, patches and tests. There is no inbuilt knowledge about what any of the tests do, and things like Git integration are added around the outside. Polymorphism basically makes it impossible to cheat and shove such knowledge into the internals.
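As an illustration of that point, here is a sketch of a core decision written against an abstract test type. It is hypothetical (`verdict` is not a Bake function): because it only knows that tests can be compared for equality, it has no way to construct or inspect a particular test, so it cannot special-case one.

```haskell
-- Hypothetical core step, polymorphic in the test type.
-- Just True: every needed test passed; Just False: some needed test failed;
-- Nothing: some results are still outstanding.
verdict :: Eq test => [test] -> [(test, Bool)] -> Maybe Bool
verdict needed results
  | any ((== Just False) . result) needed = Just False
  | all ((== Just True) . result) needed = Just True
  | otherwise = Nothing
  where
    result t = lookup t results

main :: IO ()
main = do
  -- The same function works for any test type with equality, e.g. strings.
  print (verdict ["compile", "run"] [("compile", True)])
  print (verdict ["compile", "run"] [("compile", True), ("run", True)])
  print (verdict ["compile", "run"] [("compile", True), ("run", False)])
```

The three calls print `Nothing`, `Just True` and `Just False`; the same function could be applied unchanged to the (Platform,Action) tests from the example.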
