Having done "wc -c" as the previous benchmark, the next thing to look at is "wc -l" - line counting. A line is defined as being a sequence of non-newline characters, optionally ending with a newline. This means that "test" has 1 line, "test\n" has 1 line, "test\ntest" has 2 lines. Its trivial to express this in Haskell:

print . length . lines =<< getContents

The Haskell code uses the same getContents as before, but the C code needed more changes:

#include "stdio.h"

int main()

{

int i = 0;

int last = 0;

while (last = getchar() != EOF) {

if (last == '\n')

i++;

}

if (last == '\n')

i--;

printf("%i\n", i);

return 0;

}

The C code is quite hard to write. In fact, this code is the original version I wrote - with an accidental mistake. Rest assured that I benchmarked with the correct code, but see if you can spot the mistake.

As in the previous example, I've demanded that all implementations use getchar at their heart, to make everything a fair comparison. The benchmark was then line counting on the same 35Mb file as previously:

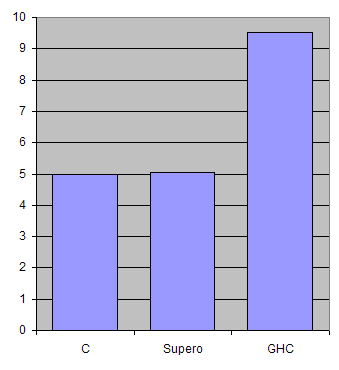

And the numbers: C=4.969, Supero=5.063, GHC=9.533. This time the difference between C and Supero is a mere 2%. However the difference between Supero and GHC has grown considerably! The reason for this is an advanced optimisation that has managed to remove all the intermediate lists. In a normal Haskell program, getContents would build a list of characters, lines would take that list, and build a list of strings, then length would take that list and consume it. With my optimisation, no intermediate lists are created at all.

While in the previous benchmark it was easy to examine and understand the generated GHC Core, for this one it is much harder. The benchmark has ended up using a recursive group of functions, none of which use unboxed numbers. For this reason, I suspect that the benchmark could be improved further for Supero. Once again, credit needs to be given to GHC for the results - it is clearly doing some clever things.

This benchmark is slightly more interesting than the previous one, but is still a bit of a micro-benchmark - not following a real program. My next task is to probably move on to "wc -w" to complete then set, then a real benchmark suite such as nobench. I hope that this demonstrates that Haskell can be beautiful, pure, and match C for speed in some cases.

15 comments:

Very nice!

How do your optimizations affect code size?

#include "stdio.h"

int main()

{

int i = 0;

int last = 0;

while ((last = getchar()) != EOF) {

if (last == '\n')

i++;

}

if (last == '\n')

i--;

printf("%i\n", i);

return 0;

}

I'm far from a C expert, but I don't totally get this part:

while (last = getchar() != EOF) {

if (last == '\n')

i++;

}

if (last == '\n')

i--;

How can that last 'if' ever be true, since it'll only get to that part of the code when the while condition is false, and the while condition is only false if 'last' is EOF. Or is it somehow possible for 'last' to be both \n AND EOF at the same time?

I'm sure it's a stupid question, but I'm curious about it.

Thanks!

Ludo

Ludo, I do believe that's the "accidental mistake" Neil mentioned.

Anon1: It tends to reduce code size a bit, in the examples I've tried. Code size can only properly be measured on large programs though, so I'll wait a while before giving any results on that.

Anon2: Correct, that is the mistake - I missed some brackets. Purely because its been a while since I used C full-time, and had forgotten the priority rules for operators. The lack of decent types in C means I missed it, but caught it the very first test run.

It is not entirely fair on Haskell since the C header files normally define getchar as a macro which is expanded inline, but also export it as a regular function which GHC is calling.

I'm also curious about under what circumstances the last if will be true.

When I compile the first version with -Wall I do get a warning showing what's wrong.

Ah, it seems on my system (GCC 4.1.2/Glibc 2.5), getchar() is a regular function call. You could insert "#undef getchar" after the #includes to make sure.

That is not enouth good for C. You take one char after another. You should use a buffer (of 2048 for example). It would be very faster, and faster than GHC's one.

fridim: You could use a buffer for either, and it would speed both up by similar amounts. By using no buffering you make the test a more consistent basis.

-- Neil

Gwenhwyfaer: apparently the mistake was the missing parentheses.

So I'm still wondering what the last 'if' does, just like anon in comment #7. Neil, if you could clear that up for us, that'd be great :-)

Ludoa: eek, I've got that wrong - yes, that if will always be false. It doesn't effect the benchmark I was running, but I'll fix it up.

Hi Neil, isn't the output of your code (modified to fix the other mistakes mentioned) 0 for the first sample input you provide, "text", with no newline? It seems that you want to start your count at 1. (But then you have to check for a completely empty file, too.

The optimization is as good as it gets on this example, since the code hangs on the standard C libarary's getchar() function, and uses almost all time in that function. Any improvement on this benchmark will require to rewrite the C code or the Haskell runtime to use other functions. I rewrote the C code using a buffer and using memory maped ID (mmap). Rewriting the Haskell runtime requires a bit more than I'm willing to spend here though. I have a Core2 duo, and tested on a 1GB file (32 MiB is too small to get a realistic test)

The results are as follows:

"getchar()": 14.3s

buffered: 5.5s

mmaped: 2.1s

The standard C library is in other words quite slow, and this is quite typical in many OSes, and most nontrivial C applications would have used buffered or mmaped IO. It is still quite impressive, since you basicly have managed to get the program IO-bound, in the sense that it hangs on the runtime.

Alex: The code is not very good, and doesn't work much... I'll blog a fix shortly.

Anon: Thanks for the further benchmarks, very useful!

Post a Comment