- Using much larger data sets, to increase the accuracy.

- Making sure all the tests are consistent, and that the playing field is level.

- Making the benchmarks repeatable, not relying on invariants that GHC does not promise.

- Understanding the precise results: I want to know why a particular benchmark gives the results it does.

- Improving the performance, where increased understanding enables this.

1) I've increased the default data set to 45 MB. This should give more accurate results. I've also benchmarked on a variety of computers, and found that the relative difference between processor and disk speed makes a massive difference. All the results given here are from my work machine. On my home machine Supero is up to 4 times faster than GHC!

2) It turned out that using -fvia-C meant that the gcc back end to GHC was inlining some primitive functions. I've now moved to using -fasm with ghc, which results in no inlining. I've also set appropriate definitions in the C to turn off inlining of its library functions. With all these steps, no programming language does any inlining inside getchar() or isspace(). Since all programs make exactly the same number of calls to each function, this should not benefit or penalise any language, but it is more consistent.

3) I've moved from using my fake version of the IO Monad, to using GHC's IO Monad. This change means that GHC no longer attempts to optimise my computations into constants, and means that future versions of GHC are guaranteed to behave in much the same way. This also has the side effect that the code should be faster (it isn't, discussed next, but it should be!)

4) With more detailed and repeatable benchmarks, I've started to look for the precise reasons why a particular benchmark performs as it does. In doing so I've noticed that GHC can place heap checks in the wrong place, sometimes fails to infer the correct strictness information and has too many stack checks. I have reported each of these issues. The benchmarks are performed with these problems present in the Supero results. As far as I am able to tell, if these three issues were solved, Supero would always obtain the same speed as C (with the same assembly code), or outperform C (in one case).

5) I discovered an issue with the words function, which I have brought to the attention of the Haskell library maintainers. The words function as currently in the libraries performs two redundant isspace tests per word detected. The fix is simple, and has been applied to the libraries Supero uses. Note that the GHC result has not had this fix applied, so could be expected to improve.

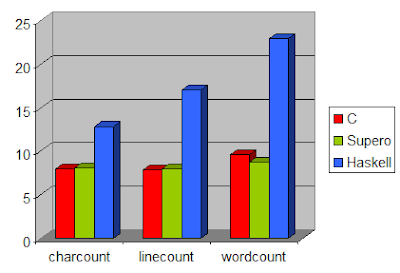

With all these modifications, it only remains to post updated benchmarks:

The benchmarks follow an expected pattern for character counting and line counting. The C version is minimally faster than the Supero version, 1.5% faster in both cases. The Haskell version lags substantially further behind.

The one benchmark where this is not the case is word count. In this benchmark Supero is 10% faster than C! This even includes the overheads of missed strictness, excessive heap checks and excessive stack checks. Obviously this is a surprising result, so deserves more explanation. The C code which performs word counting is:

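    #include <ctype.h>
    #include <stdio.h>

    int main() {
        int i = 0;                              /* number of words seen so far */
        int c, last_space = 1, this_space;

        while ((c = getchar()) != EOF) {
            this_space = isspace(c);
            if (last_space && !this_space)      /* space -> non-space: a word starts */
                i++;
            last_space = this_space;
        }
        printf("%i\n", i);
        return 0;
    }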

There are essentially two states - traversing through a sequence of spaces, or traversing through a sequence of non-spaces. Depending on which state you are in, and where you are transitioning to, you may need to increment a counter. The C code maintains this state information in last_space.

However, this is not the fastest method. If that 1-bit of information was encoded in the program counter, i.e. by having different paths for being in a sequence of spaces vs non-spaces, the code can be further optimised: the last_space variable becomes redundant; the increment test can be eliminated in one branch.

To implement two tight inner loops in C, where control can bounce between the loops, is not trivial. One approach is to use goto, but this often disables some optimisations. The other approach is to have nested while loops, with a return to exit the inner loop. Either way is unpleasant, and unnatural.

Contrast this low-level hackery with the Haskell version:

main = print . length . words =<< getContents

The code is specified in a high-level manner. By running this code through Supero, it automatically produces the necessary pair of tight loops, with transitions between them, using tail calls. GHC takes this code and produces directly looping code, which is able to outperform the C equivalent.

My next step is to take the Supero program, and try it on a larger range of benchmarks. I hope to tackle the nobench suite, competing against other Haskell compilers, rather than against C.

13 comments:

Why not try writing a C implementation of the program requirements that's actually fast? Your C program would be slow because it's reading one character at a time from the input device. Your entire post is nonsense.

Hot: All test programs use getchar to get each character, one at a time. The C program could be faster by reading multiple characters at once, but exactly the same could be done for the Haskell. Removing buffering for all languages simply makes the testing more fair.

-- Neil

getchar() isn't unfair, actually -- C libraries almost always implement it as a macro which expands to something like:

ch = --stdin->cnt >= 0 ? (int)(*stdin->ptr++) : _filbuf(stdin);

I.e., most of the time just two or three machine instructions to decrement and test the counter, get a char from the buffer and increment the pointer -- and you have buffered I/O.

There is nothing fair about this. This is a fool producing an incredibly biased benchmark.

Anon: Could you expand? I'm very interested to hear specific criticisms, but it's hard to address vague ones.

I would be interested in the performance differences with as many optimisations enabled as possible in C and Haskell. If I have understood your aim, you would probably want to post results with optimisations on and off.

I think you're missing the point by using such an obviously IO bound test case. There is no way "to make it fair" by trying to match the character reading functions or whatever you're trying to do. As suggested above the only way to make a useful comparison would be to optimize both and see what happens.

For IO bound problems you can always speed it up by using (in order of increasing complexity and speed): a line buffer, a large blocked buffer, raw file operations, overlapped asynchronous IO with a decent read-ahead distance, cache block copying, using OS systems to get the MMU to eliminate any copying in the first place.

All these things are possible in C. Admittedly they would bloat your program a lot. My point is there is no "base line" to compare against with IO. Currently this is the sort of thing that C and to a lesser extent Fortran are clear winners at.

Why don't you do something that's compute bound instead? A much more useful comparison. There are some good compute-bound benchmarks at: http://shootout.alioth.debian.org/debian/index.php

Alex: I am perfectly aware that a compute intensive benchmark is much better for comparing languages. These are not the final benchmarks, merely short and simple programs on the way towards them.

Fair enough. I was just saying that your point 2) above, plus the fact you considered 10% difference significant seemed to suggest you were reading a bit too much into it. Best of luck with your project.

Have you tried using getchar_unlocked() in the C tests?

Alternatively, have you tried a gcc/libc combination that's a couple of years old, from before they started making stdio thread-safe?

The thing is, getchar() is usually NOT a macro these days.

alex is sort of right regarding I/O but the reality is more complex than that. If the I/O ends up as read() calls you don't want to use a blocksize that is too big relative to the size of the CPU cache(s) lest you incur cache misses both inside the OS when copying from the pages where it cached (parts of) the file to the program's read buffer AND when the program traverses said read buffer.

Any decent OS will automatically start prefetching a file if it isn't completely in memory when a program uses read() to read it linearly. That's quite cheap in terms of CPU cycles.

If the file is already completely read into memory by the OS and fully (OS) cached then it is faster to mmap() the whole thing (or as much as will comfortably fit into the app's memory space) because it will only induce one CPU cache miss per byte (i.e. per cacheline). It will also be prefetched nicer into the CPU cache if there's only a single "access stream".

So what do you do if you don't know whether the OS has cached the file or not? You could try using fadvise() and madvise(). fadvise() is not actually necessary unless you want to tell the OS to throw the file cache away afterwards (useful for MP3 players and things like file indexers). The automatic prefetch works extremely well so it's very hard to give better hinting.

madvise() is the same thing for memory-mapped files where the prefetch doesn't work as well and where the mapping is usually lazy which incurs more minor page faults than one would like.

Unfortunately, madvise() is hard to implement and seldom used, so it generally simply blocks until the OS has read enough into its file cache :(

The optimal thing to do is either to do something simple (i.e. use read() with a smallish buffer) or use a helper thread to issue the madvise() call(s).

Asynchronous I/O is a bitch to work with and it tends to circumvent the automatic prefetching. Using several threads and making them read linearly but at a fixed distance from each other is easier to implement and can be faster than a single read thread (by allowing harddisk I/O in an order more suited to the layout on disk), but it depends on how that interferes with the prefetching and on the actual layout on disk. I'm not convinced it is a good idea unless the file is spread out on more than one spindle or is severely fragmented.

(blogger "helpfully" only prints the time of day for these comments so I'll add here that I wrote this on 2007-11-30)

Peter: I'm running these benchmarks on Windows, which doesn't have getchar_unlocked in my particular version of glib. You can use preprocessor defines to either switch on or off the multithreaded versions, and also separately whether CRT functions get inlined. I've set both to off, to get a fair comparison.

I know I could speed up the reading, but I'm not sure I could do it in a consistent manner.

Neil,

Why don't you put the text in an internal memory buffer, timing only the counting operation using this buffer?

anon: That would change the constant overhead of accessing each character, so speed everything up, but since I'm doing a comparison it doesn't make much difference. The cost of reading characters is a sunk cost, the question is how much more effort the program takes.
