I was browsing through the Yhc standard libraries, as one does on the weekend, and was drawn to Yhc's sort function. It had some undesirable characteristics for one of the projects I was working on, so I wondered if other Haskell systems used different implementations. I checked GHC, and discovered that their sort was different. In general, when Yhc and GHC have different implementations of a standard library function, the GHC one is better tuned for performance. I decided to replace the Yhc sort function with the GHC one, but before doing so, thought a quick performance test was in order. So I came up with something simple:

    test = do
        src <- readFile "Sort.hs"
        print $ ordered $ sort $ sort $ reverse $ sort src

The file "Sort.hs" was simply the source code of the program itself. The code sorts the contents of this file, reverses the result, sorts it, and sorts it again. This means that the program performs one sort over semi-random data, one over reverse-ordered data and one over ordered data. These are fairly standard cases that should be checked. This test is not a comprehensive benchmark, but a nice quick indicator.
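The test relies on an `ordered` function that is not shown. A minimal sketch of the whole harness might look like the following, assuming `ordered` simply checks that each element is no greater than its successor (that definition, and the name `threeSorts`, are my guesses for illustration). `Data.List.sort` stands in for whichever sort is being measured.

```haskell
import Data.List (sort)

-- Assumed helper: True when every element is <= the one after it.
-- Walking the whole result also forces all three sorts to run to completion.
ordered :: Ord a => [a] -> Bool
ordered xs = and (zipWith (<=) xs (drop 1 xs))

-- Illustrative name: the three sorts from the test, in application order:
-- semi-random input, then reverse-ordered input, then ordered input.
threeSorts :: Ord a => [a] -> [a]
threeSorts = sort . sort . reverse . sort

test :: IO ()
test = do
    src <- readFile "Sort.hs"
    print $ ordered $ threeSorts src
```

Printing only the `Bool` keeps the output small while still demanding the entire sorted list.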

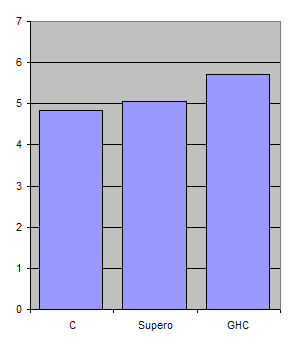

I ran the Yhc sort function against the GHC version, and was shocked to find that the Yhc code was twice as fast. I ran the benchmark under Yhc, GHC and Hugs (using reduction count in Hugs), and in all cases the performance was doubled. I was not expecting this result!

The code for the GHC sort is:

    mergesort :: (a -> a -> Ordering) -> [a] -> [a]
    mergesort cmp = mergesort' cmp . map (:[])

    mergesort' :: (a -> a -> Ordering) -> [[a]] -> [a]
    mergesort' cmp [] = []
    mergesort' cmp [xs] = xs
    mergesort' cmp xss = mergesort' cmp (merge_pairs cmp xss)

    merge_pairs :: (a -> a -> Ordering) -> [[a]] -> [[a]]
    merge_pairs cmp [] = []
    merge_pairs cmp [xs] = [xs]
    merge_pairs cmp (xs:ys:xss) = merge cmp xs ys : merge_pairs cmp xss

    merge :: (a -> a -> Ordering) -> [a] -> [a] -> [a]
    merge cmp [] ys = ys
    merge cmp xs [] = xs
    merge cmp (x:xs) (y:ys)
     = case x `cmp` y of
            GT -> y : merge cmp (x:xs) ys
            _  -> x : merge cmp xs (y:ys)

The function works by splitting the list into one-element lists, so that each starting list is trivially ordered. These lists are then merged in pairs until a single list is left. For example, given the input "sort":

"s" "o" "r" "t"

"os" "rt"

"orst"

We first split each character into its own list, then merge adjacent pairs. This code corresponds to the standard merge sort. But instead of making each initial list a single element, we could use sequences of increasing elements, for example using the risers function:

    risers :: Ord a => [a] -> [[a]]
    risers [] = []
    risers [x] = [[x]]
    risers (x:y:etc) = if x <= y then (x:s):ss else [x]:(s:ss)
        where (s:ss) = risers (y:etc)

Now, if we apply risers "sort" we get ["s","ort"]. We can now follow the same merge procedure as before:

"s" "ort"

"orst"

Instead of doing 3 merges, we have done only 1. Given the input "abcd" the effect would have been even more dramatic. We can refine this scheme further, by detecting both ascending and descending chains of elements in the initial list. This technique is used by Yhc, and is based on code originally written by Lennart Augustsson. Knowing the original source of the code, my shock at the performance benefits offered by the Yhc version has decreased substantially.
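To make the ascending-and-descending idea concrete, here is a rough sketch of a sort that starts from such chains. It is not the Yhc code; the names `runs`, `ascending`, `descending`, `mergeAll` and `runSort` are mine, and I have used `Ord` directly rather than passing a comparison function. Descending chains are only extended on strictly greater elements, so that reversing them does not reorder equal elements.

```haskell
-- Split a list into chains, each returned in ascending order.
runs :: Ord a => [a] -> [[a]]
runs (a:b:xs)
    | a > b     = descending b [a] xs
    | otherwise = ascending b (a:) xs
runs xs = [xs | not (null xs)]

-- Collect a strictly descending chain; consing onto acc reverses it.
descending :: Ord a => a -> [a] -> [a] -> [[a]]
descending a acc (b:bs)
    | a > b = descending b (a:acc) bs
descending a acc bs = (a:acc) : runs bs

-- Collect a non-descending chain; acc is a difference list, so order is kept.
ascending :: Ord a => a -> ([a] -> [a]) -> [a] -> [[a]]
ascending a acc (b:bs)
    | a <= b = ascending b (acc . (a:)) bs
ascending a acc bs = acc [a] : runs bs

-- Standard merge of two ordered lists, preferring the left on ties.
merge :: Ord a => [a] -> [a] -> [a]
merge [] ys = ys
merge xs [] = xs
merge (x:xs) (y:ys)
    | x > y     = y : merge (x:xs) ys
    | otherwise = x : merge xs (y:ys)

-- Merge adjacent pairs until a single list remains.
mergeAll :: Ord a => [[a]] -> [a]
mergeAll []   = []
mergeAll [xs] = xs
mergeAll xss  = mergeAll (mergePairs xss)
  where
    mergePairs (as:bs:rest) = merge as bs : mergePairs rest
    mergePairs rest         = rest

runSort :: Ord a => [a] -> [a]
runSort = mergeAll . runs
```

With these definitions, `runs "abcd"` and `runs "dcba"` both give `["abcd"]`, so fully ordered and fully reversed inputs need no merging at all, while `runs "sort"` gives `["os","rt"]`.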

The GHC sort function should probably be replaced by the one from the Yhc libraries. This would offer increased performance, especially in the case of ordered or reverse-ordered lists, where a sort that starts from existing runs has almost no merging left to do. The asymptotic complexity of the two sorts means that there must exist some value of n such that sort [1..n] runs faster in Yhc than in GHC (assuming sufficient stack/heap for both). I wonder whether Int32 is capable of expressing such a value...
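The asymptotic claim can be checked on small inputs by counting comparisons rather than measuring time. The sketch below is my own instrumentation, not code from either library: `mergeC`, `pairsC`, `mergeAllC`, `singletonCost` and `risersCost` are illustrative names, and the run-based variant uses the simple `risers` function rather than the real Yhc sort.

```haskell
-- The risers function from above, repeated so the block is self-contained.
risers :: Ord a => [a] -> [[a]]
risers [] = []
risers [x] = [[x]]
risers (x:y:etc) = if x <= y then (x:s):ss else [x]:(s:ss)
    where (s:ss) = risers (y:etc)

-- Merge two ordered lists, also returning the number of comparisons made.
mergeC :: Ord a => [a] -> [a] -> (Int, [a])
mergeC [] ys = (0, ys)
mergeC xs [] = (0, xs)
mergeC (x:xs) (y:ys)
    | x > y     = let (n, zs) = mergeC (x:xs) ys in (n + 1, y : zs)
    | otherwise = let (n, zs) = mergeC xs (y:ys) in (n + 1, x : zs)

-- One pass of pairwise merging, with the comparisons it used.
pairsC :: Ord a => [[a]] -> (Int, [[a]])
pairsC (xs:ys:rest) =
    let (n, zs)  = mergeC xs ys
        (m, zss) = pairsC rest
    in (n + m, zs : zss)
pairsC xss = (0, xss)

-- Total comparisons needed to merge everything down to one list.
mergeAllC :: Ord a => [[a]] -> Int
mergeAllC []  = 0
mergeAllC [_] = 0
mergeAllC xss = let (n, yss) = pairsC xss in n + mergeAllC yss

-- Starting from one-element lists, as in the GHC code above.
singletonCost :: Ord a => [a] -> Int
singletonCost = mergeAllC . map (:[])

-- Starting from risers: one comparison per adjacent pair, plus the merges.
risersCost :: Ord a => [a] -> Int
risersCost xs = max 0 (length xs - 1) + mergeAllC (risers xs)
```

On ordered input, `singletonCost [1..1024 :: Int]` is 5120 (ten passes of 512 comparisons each) while `risersCost [1..1024 :: Int]` is 1023. The first grows as (n/2) log2 n and the second as n - 1, so the gap widens with n, although comparison counts say nothing about the constant factors between the two compilers.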

A side note: I have been playing with the risers function for several years. I've used it as an example of pattern match checking, both specialised to Int and on a general Ord class. I've used it for supercompiling. It's appeared in blog posts, a TFP paper (Mitchell+Runciman 2007), a Haskell Workshop paper (Xu 2006) etc. The example was originally suggested to me from a functional programming exam paper, but only today at lunch did I discover its true origins. The risers function had originally been the first step in a merge sort!