I was reading

JFP this weekend, and read the paper "

Spreadsheet functional programming" by David Wakeling. As a Windows user who has done a lot of work with Excel, I thought it was great. People before have either tried to pervert Excel into a functional language, or write a new spreadsheet - and given that Open Office spreadsheet sucks totally - this seems to be a hard challenge.

Despite being a lovely concept, I thought the implementation described missed the mark in a few places. These are not academic reasons, but reasons of practicality for a production system and integration with the underlying Excel. I fully expect that the reasons stem from missing a deep understanding of Excel - something few academics would be likely to master. However, my Dad is a Chartered Accountant, and I've been developing systems based around Excel for over 10 years, so can fill in the Excel half.

Spreadsheet Functional ProgrammingBefore describing how I would implement the ideas in the above paper, its best to recap the paper a bit, for those people who can't be bothered to read it. (Note: the above paper is very accessible, even a novice programmer should be able to get something from it - so skip this summary and read the paper!)

The basic idea is that Excel should be able to call Haskell, so:

=Haskell("1+2")

should call off to Haskell and evaluate 1+2, hopefully returning 3. Naturally that sort of thing could be done in Excel much more conveniently, so the strength should be in definition of proper Haskell functions within Excel:

f :: Int -> Int -> Int

f a b = (a + b) / 2

=Haskell("f 1 2")

(imagine a more complex example for f, I'm too lazy to think of one, but the paper has one)

Obviously, =Haskell should go in the Cell you want the result to appear in, but what about the definition of f? This paper chooses to place definitions of f in comments, a neat solution that keeps them in the document, but out of the small cells, which would be inappropriate.

This is extended by allowing users to write references such as A1 in their Haskell strings. Unfortunately this shows up a weakness - now dragging formula around does not automatically update their references as it normally does in Excel, since the reference is hidden from Excel inside a string.

The final point to mention is that the evaluation is done with Hugs, by writing out a static file and running it - something I believe could end up being too slow for practical use. Whether it is or not isn't something I can comment on, having not tried it in real life.

HsExcelReading the paper, there are lots of things which are done spot on, and seem obvious in retrospect. The best ideas always seem good in retrospect, so I consider this a very good thing. Unfortunately I see 3 issues:

- Writing code in comments looks highly unpleasant

- Haskell is in strings, which enforces separation between Haskell and Excel

- Not fast enough

I have come up with a design which I believe addresses all of these issues, which I have named HsExcel. I have not implemented it, so cannot guarantee it would really work, but I suspect it would not be that much of a challenge. I have no free time currently, but it is on my todo list (somewhere).

The first issue I shall tackle is writing code in comments. A much nicer solution would be to add a tab to Excel which contained Haskell code in a nice big box, perhaps also allowing Haskell code in comments if people found it preferable for small snippets. This can be done using Excel dialog panes with a bit of VB magic.

Next is the issue of Haskell in strings, instead of =Haskell("f 1 2") I would write:

=hs("f",1,2)

Here the Excel will evaluate 1 and 2, and pass them to the Haskell function f. If 1 was A1, then it would behave like a normal A1 in Excel. Perhaps allowing =Haskell as well would be nice, but I think the common case would be =hs. This also allows better flexibility, nested Excel and Haskell functions together, intermingled. I also think this will make cell dependency updating work (an issue not covered in the paper).

Once this has been done, Haskell could operate on any Excel values, including Cell Ranges etc. Unfortunately the paper is silent on the issue of marshaling Excel data to Haskell, but I suspect it could be done relatively easily.

One nice feature to add would be that =hs_f(1,2) works, just like an Excel function. I'm not sure if VBA is permitted to add primitive functions to Excel, or just addons. I'm also not sure if addons can dynamically change the available functions. If this was the case, then all entires in the Haskell source could become first class Excel functions.

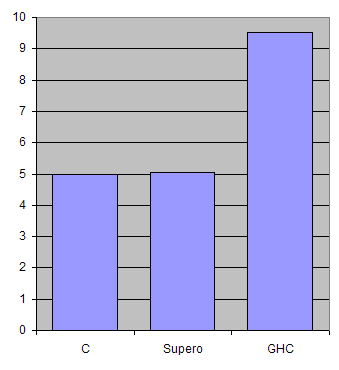

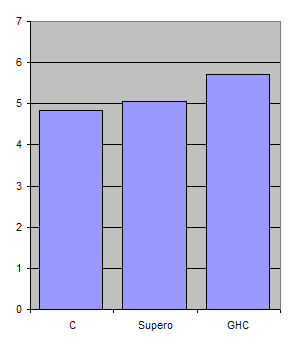

The final question is the issue of speed. Currently the implementation runs the string from the beginning each time, writing out fresh values for the changed cells. I think spawning a shell and talking to an interpreter with each request would be higher performance. Once this solution was adopted, either GHCi or Hugs could be used, potentially allowing for a further speed up. The author comments that this is hard to do using VB's Shell command, and indeed it is. However, it is possible to prod standard Win32 system calls from VB, albeit with a great deal of work, which could get this working properly.

ConclusionThe paper presented a lot of good idea, I think a bit of user interface polish could bring them to the fore. HsExcel could be a useful tool - I certainly have projects I could use it for. I suspect that it would be under a weeks work for anyone already fluent in Haskell and (more importantly) Excel/VBA. Any volunteers?